Throughout this series, we’ve discussed the importance of taking a population-based approach to care management. A population-based care management approach applies care services across the full continuum of health, for ALL patients, with the aim of providing appropriate interventions based on the individual’s current health status and unique needs – as opposed to focusing strictly on high-cost/high-risk patients.

Such an approach requires a well-designed framework powered by advanced analytics. Included in this framework are three primary steps: population monitoring, clinical identification/screening, and performance measurement. Up to this point, we’ve covered population monitoring and clinical identification/screening. More details on these topics can be found in blogs 4 – 7. In this blog, we’ll be covering the third and final step – performance measurement.

What Is Performance Measurement?

Performance Measurement is the systematic collection of information about activities, characteristics, and outcomes of programs to make judgments about the program, improve program effectiveness, and/or inform decisions about future program development (CDC).

A well-designed framework helps us ask important questions like:

- Are we doing the right work?

- Can we make better decisions?

- Can we achieve better outcomes?

- Do our teams have the right resources?

- What’s the value in investing, and should we continue?

- Are there unintended consequences?

The Foundation for an Effective Framework

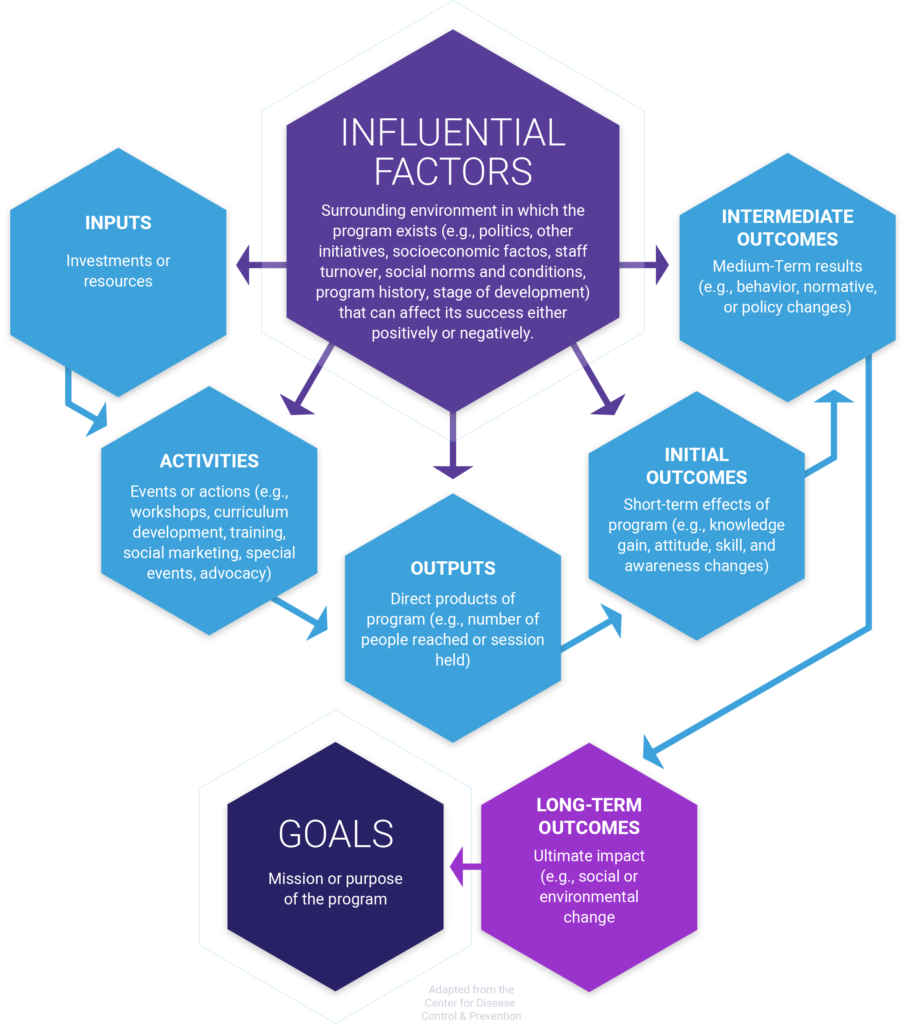

Designing and implementing effective strategies requires clearly defined program goals and objectives. One of the most important artifacts needed to create an effective framework is the logic model. A logic model is a charter that defines a program’s intended impacts and helps align the program across all phases of planning, implementation, management, and reporting.

The Logic Model

The logic model consists of six basic components: a goal statement, influential factors, inputs, activities, outputs, and outcomes.

Goal statement – a broad summary of the intended accomplishments of the program that will be used as a basis to drive overall direction and focus for the program, define what the program will achieve, and serve as the foundation for developing program strategies and objectives.

Influential factors – the external factors impacting the program (e.g., political environment, other initiatives, patient socioeconomic influencers of health, program history, etc.).

Inputs – the various resources available to support the program (e.g., staff, materials, curricula, funding, equipment).

Activities – the active components of the program (e.g., develop or select a curriculum, write a plan, implement a curriculum, train educators, pull together a coalition). These are sometimes referred to as process objectives.

Outputs – the direct products of the program (e.g., how many individuals completed the program, how many evaluations were conducted, how many check-ins took place with the patient, etc.).

Outcomes – the intended accomplishments of the program. Outcomes should be segmented into initial, intermediate, and long-term (distal) outcomes. Long-term outcomes are often discussed in terms of “impact” (the broader social, economic, or environmental change created).
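To make the six components concrete, here is a minimal Python sketch of a logic model as a simple data structure. The class names, field names, and the `missing_components` helper are hypothetical choices for illustration, and the example values are invented; this is not a prescribed schema.

```python
from dataclasses import dataclass, field


@dataclass
class Outcomes:
    """Intended accomplishments, segmented by time horizon."""
    initial: list[str] = field(default_factory=list)
    intermediate: list[str] = field(default_factory=list)
    long_term: list[str] = field(default_factory=list)


@dataclass
class LogicModel:
    """Hypothetical container for the six basic logic model components."""
    goal_statement: str
    influential_factors: list[str] = field(default_factory=list)
    inputs: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    outcomes: Outcomes = field(default_factory=Outcomes)

    def missing_components(self) -> list[str]:
        """Return the names of components that have not been filled in yet."""
        missing = []
        if not self.goal_statement.strip():
            missing.append("goal_statement")
        for name in ("influential_factors", "inputs", "activities", "outputs"):
            if not getattr(self, name):
                missing.append(name)
        has_outcomes = (
            self.outcomes.initial
            or self.outcomes.intermediate
            or self.outcomes.long_term
        )
        if not has_outcomes:
            missing.append("outcomes")
        return missing


# Illustrative values only (not taken from a real program).
model = LogicModel(
    goal_statement="Reduce avoidable ED visits among patients with chronic illness",
    inputs=["care managers", "funding"],
    activities=["train educators"],
    outputs=["number of individuals who completed the program"],
    outcomes=Outcomes(intermediate=["ER visits per 1000 participants per year"]),
)
print(model.missing_components())  # ['influential_factors']
```

A check like `missing_components` can be a quick way to spot gaps in a draft logic model before moving on to planning and reporting.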

Steps Involved in Performance Evaluation

Once we have a clear understanding of the problem/opportunity to be addressed and the objectives for how the program will address it, we can shift focus towards reporting strategies.

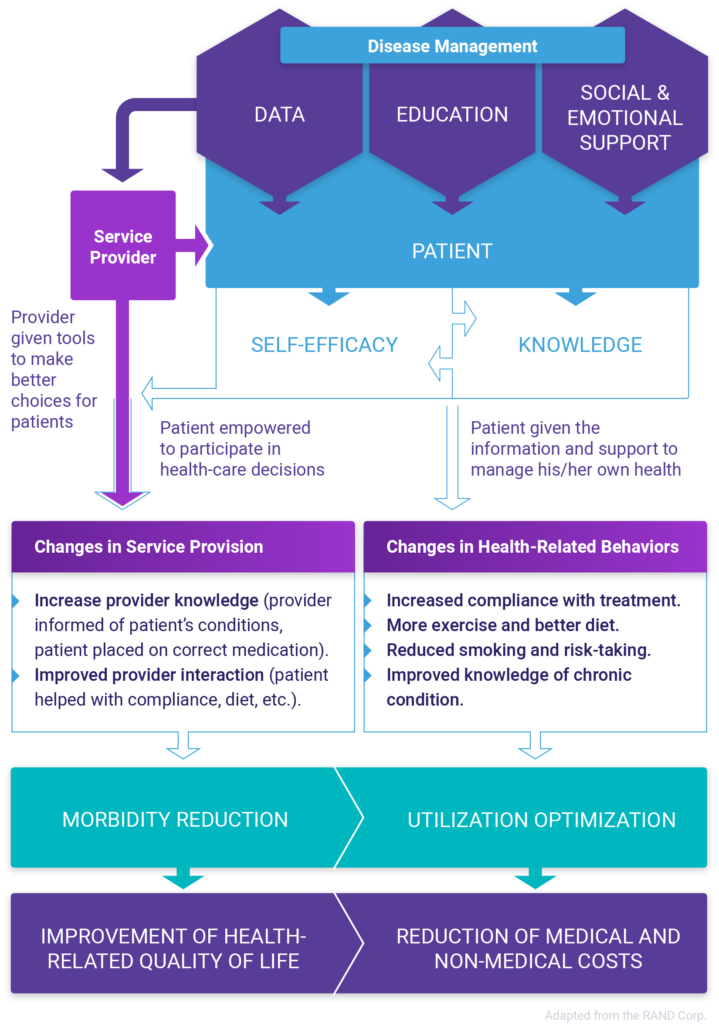

There are many established frameworks for conducting performance evaluations. The CDC’s Program Performance and Evaluation Office has good resources. The RE-AIM Framework is great for evaluating intervention programs. The RAND Corporation produced a comprehensive framework for chronic disease management. Regardless of which framework is selected, there are typically four primary steps involved: planning, monitoring, analyzing, and reporting.

Planning: “What is the intent/objective(s) of the program, intervention, etc.?”

- State the problem/opportunity

- Define the goals & objectives

- Construct the logic model & framework

Monitoring: “Are we capturing the right information at the right level of detail?”

- Gather baseline data

- Track expenditures of time and resources

- Collect data on interventions and the short/long term anticipated outcomes

Analyzing: “Did we achieve the intended outcomes? Was it worth the investment?”

- Analyze and compare data with the baseline

- Interpret findings

- Identify implications of findings

Reporting: “Who needs the information and how will it be used?”

- Organize findings

- Draft conclusions

- Disseminate information

Characteristics of a Well-Designed Framework

A well-designed framework has several characteristics: it includes a comprehensive set of measures; it’s relevant to decision-making; it includes measures that are readily available, established, and scientifically sound; and it’s feasible in terms of accessing data and the effort required to develop and implement the metrics.

Comprehensive measurement – Understanding how a program or intervention is performing requires comprehensive measurement including insights into processes, outputs, and outcomes. The ability to measure across these three dimensions provides the insights needed to evaluate program efficiency, capacity, effectiveness, and impact.

Relevance – Measures should be limited to those needed for decision-making. When determining the relevance of a metric, we need to ask: “Is this a key element needed for decision-making? Is the measure of sufficient interest to the stakeholders who will ultimately be consuming the report? Is it worth the time and effort needed to develop, implement, and evaluate the metric?”

Availability – When possible, measures should be evidence-based. It’s best to use metrics that are established and have been vetted by the healthcare community. In the case of care management/disease management, there has been a significant amount of work to develop these metrics, especially for outcomes-based measures. The Healthcare Effectiveness Data and Information Set (HEDIS) measure set is a great place to start. The National Quality Forum (NQF) also offers a library of measures to choose from for evaluating disease prevention and management.

Sustainability – Measures should be sustainable in terms of collecting and managing the data needed to evaluate performance. Feasibility and complexity are two components that should be considered when selecting measures. Sustainability should be considered jointly with the relevance of the measure. Ultimately, you want to select measures that are both sustainable and highly relevant.

Selecting the Right Measures

A meaningful framework should make it possible to answer the question “Does it make sense to invest in the program?” This requires the ability to measure several components, including processes/inputs, outputs, outcomes, and return on investment (ROI). Outcomes should be further evaluated by looking at initial outcomes, intermediate outcomes, and long-term outcomes. Provided below is an overview of each type of measurement, along with a few examples of measures from the RAND Corporation performance evaluation framework that could be helpful when evaluating a care management program.

Process-based measures

Process-based measures are focused primarily on skills, training, and capacity. These measures look at the inputs and activities included in the program to ensure that appropriate resources/support are allocated to the program. These might include:

- The number of resources dedicated to the program.

- The skillset, knowledge, and abilities of the resources delivering the services.

- The expected resource utilization or risk level of each patient.

- The number of patients potentially eligible for engagement under a program or specific set of services.

- The panel size for each clinician with assigned patients.

- The number of steps/time involved in a particular workflow.

- Feedback from participants/partners about the program.

Output-based measures

Output-based measures are focused primarily on throughput, productivity, and efficiency. These measures look at the outputs from the program. These might include:

- The proportion of participants participating in screenings or risk assessments.

- The proportion of participants who completed the program.

- The proportion of participants participating in health education or skill development activities.

- The proportion of participants who received and followed up with referrals.

- The proportion of patients enrolled in health promotion or disease prevention programs.

- The number and types of educational materials produced for the program.

- The proportion of key stakeholders involved in the program.

- The proportion of people who are aware of program messaging and intend to take action.

Initial outcomes-based measures

Initial outcomes-based measures are focused on the immediate outcomes/changes that result from the program. In the case of measuring care management outcomes, this might focus on changes to service delivery and health-related behaviors. In a care management framework, these measures should be condition-based and established from evidence-based practice. These might include:

- The proportion of daily smokers.

- The proportion of daily smokers who quit successfully (for less than or more than 1 year).

- The proportion of participants engaging in at least 20/60 minutes of vigorous exercise daily.

- The proportion of overweight participants.

- The proportion of obese participants.

- The proportion of overweight and obese participants who lost at least 10% of their body weight over a year.

- Do care teams know which of their patients have at least 1 chronic illness?

- Has time and interaction between the care team and patient increased?

- The proportion of patients that received appropriate preventative and diagnostic screenings/tests.

- The proportion of patients that received appropriate treatment for their diagnosed conditions.

Intermediate outcomes-based measures

Intermediate outcomes-based measures are focused on the mid-range outcomes/changes that result from the program. In the case of measuring care management outcomes, this might focus on changes to utilization and morbidity reduction. As with initial outcomes-based measures, these should be condition-based and established from evidence-based practice. These might include:

- Has there been a change to the overall calculated relative health risk?

- Has there been a change to overall avoidable ED visits and hospitalizations, including those for preventable exacerbations of chronic illness, preventable complications of treatment, and preventable new health problems?

- Has there been a change to the overall number of physician office visits per 1000 participants per year?*

- Has there been a change to the overall number of ER visits per 1000 participants per year?*

- Has there been a change to the overall number of hospital admissions per 1000 participants per year?*

- Has there been a change to the overall number of hospital days per 1000 participants per year?*

- Has there been a change to the overall number of drug claims per 1000 participants per year?*

- Has there been a change to unnecessary tests and procedures?

- Has care coordination/complexity for patients who are treated across multiple physicians, facilities, and services improved?

- Has the use of lower-cost, more accessible settings and methods for care delivery increased?

- Has the referral to lower-cost, high-value providers increased?

- Has prevention and early diagnosis increased?

*Measured overall, as overall observed minus expected, and by condition.
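The utilization measures above share one calculation: events per 1000 participants per year, optionally compared against an expected rate. Below is a minimal Python sketch of that arithmetic. The function names are hypothetical, the figures are invented for illustration, and using participant-months as the denominator (so that partial-year participants count proportionally) is an assumption rather than something the framework prescribes.

```python
def rate_per_1000_per_year(event_count: int, participant_months: float) -> float:
    """Annualized events per 1000 participants.

    Assumption: exposure is tracked in participant-months, so someone
    enrolled for 6 months contributes half a participant-year.
    """
    if participant_months <= 0:
        raise ValueError("participant_months must be positive")
    participant_years = participant_months / 12
    return 1000 * event_count / participant_years


def observed_minus_expected(observed_rate: float, expected_rate: float) -> float:
    """Negative values mean utilization came in below what was expected."""
    return observed_rate - expected_rate


# Illustrative numbers only: 450 ER visits over 12,000 participant-months
# (equivalent to 1,000 participants enrolled for a full year).
observed = rate_per_1000_per_year(event_count=450, participant_months=12_000)
print(observed)                                  # 450.0
print(observed_minus_expected(observed, 500.0))  # -50.0
```

How the expected rate is derived (for example, from a risk-adjusted baseline) is outside the scope of this sketch and should be documented alongside the measure.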

Long-term outcomes-based measures

Long-term outcomes-based measures are focused on the long-range outcomes/changes that result from the program. In the case of measuring care management outcomes, this might focus on the reduction of costs and improvement of health-related quality of life. As with other outcomes-based measures, these should be condition-based and established from evidence-based practice. These might include:

- Has the total medical cost PMPM (overall, overall observed minus expected, by condition) decreased?

- Has the total prescription drug cost PMPM (overall, overall observed minus expected, by condition) decreased?

- Has the total inpatient cost PMPM (overall, overall observed minus expected, by condition) decreased?

- Has employee absenteeism decreased?

- Has the rate of people who feel hindered in performing common mental, physical, and interpersonal activities and meeting demands due to their illness improved?

- Has the rate of patient and provider satisfaction improved?
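The cost measures in the list above are expressed PMPM (per member per month), which is total cost divided by total member-months. Here is a minimal Python sketch of that calculation and a comparison against a baseline period. The function name and the dollar figures are hypothetical and used only for illustration.

```python
def pmpm(total_cost: float, member_months: float) -> float:
    """Per-member-per-month cost: total cost divided by total member-months."""
    if member_months <= 0:
        raise ValueError("member_months must be positive")
    return total_cost / member_months


# Illustrative numbers only: 250 members enrolled for 12 months in each
# period, which gives 3,000 member-months per period.
baseline_pmpm = pmpm(total_cost=1_200_000, member_months=3_000)
follow_up_pmpm = pmpm(total_cost=1_140_000, member_months=3_000)

print(baseline_pmpm)                   # 400.0
print(follow_up_pmpm)                  # 380.0
print(follow_up_pmpm - baseline_pmpm)  # -20.0
```

A negative difference indicates that PMPM cost decreased relative to the baseline. On its own it does not show that the program caused the decrease.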

Return on Investment

Return on investment can be difficult to quantify, especially in a care management program. Check out our blog, “Five steps to understanding ROI in care management,” to see how predictive analytics and a simple economic impact model can help quantify potential returns.

Interpreting the Research

Prior to communicating results, program managers/teams should analyze the data and interpret the findings. Caution must be taken when interpreting the results of an evaluation to avoid overstated or indefensible claims of success. Exaggerated or unwarranted claims can result in a loss of credibility. This process involves exploring association and causation, understanding how confounding factors can skew the results, and defining how measures are calculated.

Association

An association exists when one event is more likely to occur when another event has occurred. Although an association may exist, it’s important to recognize that one event does not necessarily cause the other. For example, patients enrolled in a complex care management program who do not use the Emergency Department (ED) for treatment would support an association between care management and reduced ED usage. However, even though enrollment in a complex care management program and lower rates of ED use may co-occur, that alone is not evidence that care management causes the reduction in ED visits.

Causation

Causation exists when one event is necessary for a second event to occur. This is often referred to as a cause-and-effect relationship. Determining cause and effect is very challenging because of the many confounding variables that can influence an outcome; this is especially true in care management, where so much depends on individual patient behavior.

Confounding

Confounding exists when a relationship between two events has been potentially distorted by other factors. For example, changes in utilization and spending can result from many factors aside from care management, like benefits design, new treatments hitting the market, changes in the patient mix, concurrent interventions, etc.

While it is nearly impossible to rule out the influence of confounders, additional information should be gathered and analyzed to help understand where limitations in the data or measure calculations may exist. This should include any factors that potentially influenced outcomes.
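As one illustration of how a change in patient mix can distort results, the Python sketch below compares a crude (overall) ED visit rate with rates calculated within risk groups. All numbers, group labels, and function names are invented for this example. In it, the overall rate falls by 25% even though the rate within each risk group is unchanged, simply because the follow-up population contains fewer high-risk patients.

```python
def rate_per_1000(visits: int, participants: int) -> float:
    """ED visits per 1000 participants."""
    return 1000 * visits / participants


def crude_rate(strata: dict[str, tuple[int, int]]) -> float:
    """Overall rate across all risk groups; each value is (visits, participants)."""
    total_visits = sum(visits for visits, _ in strata.values())
    total_participants = sum(people for _, people in strata.values())
    return rate_per_1000(total_visits, total_participants)


# Hypothetical data: (ED visits, participants) by risk group.
baseline = {"low_risk": (80, 800), "high_risk": (120, 200)}
follow_up = {"low_risk": (90, 900), "high_risk": (60, 100)}

print(crude_rate(baseline))   # 200.0
print(crude_rate(follow_up))  # 150.0

# Within each risk group, the rate did not change at all.
for group in baseline:
    before = rate_per_1000(*baseline[group])
    after = rate_per_1000(*follow_up[group])
    print(group, before, after)
# low_risk 100.0 100.0
# high_risk 600.0 600.0
```

Reporting measures by condition or risk group alongside the overall figure, as the measure lists above suggest, is one way to surface this kind of distortion.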

In summary

Performance measurement can be difficult, especially when it comes to evaluating program impacts (i.e., outcomes and ROI). But just because it’s difficult doesn’t mean it shouldn’t be done. Without performance measurement, we wouldn’t be able to answer important questions like: Are we doing the right work? Can we make better decisions? Can we achieve better outcomes? Do our teams have the right resources? What’s the value in investing, and should we continue? Are there unintended consequences? For more information about Inflight Health’s approach to population-based care management programs and our performance evaluation framework, check out the other blogs in this series or book a call with a member of our team to learn more.